Tianqin Li

The human mind's ability to reason logically has been a key distinguishing feature in our species' success. Our capacity to distill observations of the world into discrete, composable concepts enables us to extract critical information from an ocean of noise and communicate our ideas efficiently. Using these composable, prototypical concepts, we can extend previously learned knowledge to unseen observations and carry out hierarchical planning and out-of-domain reasoning.

These attributes of the human mind stand in sharp contrast to modern artificial neural networks, which represent inputs as entangled, unstructured vectors that are not robust to domain shift. This contrast motivates me to build smart machines that can (1) reason efficiently and robustly from raw sensory information and (2) learn with limited supervision.

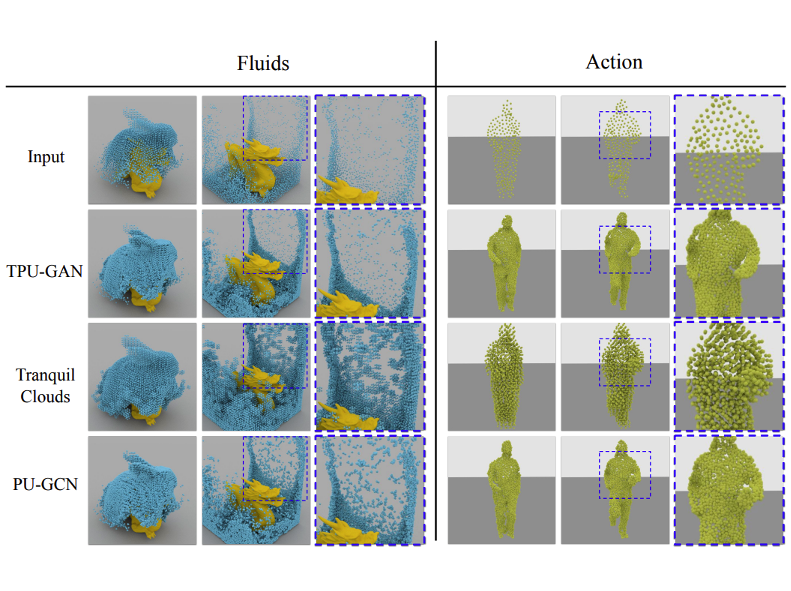

I'm also interested in various computer vision tasks, including 3D synthesis, dynamic point cloud sequence enhancement, and video prediction and interpolation. Before joining CMU and doing AI research, I studied bioinformatics and developed software for synthetic biologists to automate the process of artificially engineering life.

Collected Quotes

"Shoot for the moon. Even if you miss, you'll land among the stars."

"Make everything as simple as possible, but not simpler."

"A problem well stated is a problem half-solved."

"Major discoveries are almost always preceded by bewildering, complex observations ... I always believed that the neocortex appeared complicated largely because we didn't understand it, and that it would appear relatively simple in hindsight. Once we knew the solution, we would look back and say, 'Oh, of course, why didn't we think of that?' When our research stalled or when I was told that the brain is too complicated to understand, I would imagine a future where brain theory was part of every high school curriculum. This kept me motivated."

News

A total of 400k in compute credits is available (and growing). Join a community of Ph.D. researchers from CMU/MIT/Berkeley/Stanford/Caltech/USC, etc. Contact me if you are a Ph.D. student or postdoc working on AI and need support or want to help build a better AI future!

Publications

Intelligence Cubed: A Decentralized Modelverse for Democratizing AI

Jade Zheng*, Fernando Jia*, Florence Li*, Rebekah Jia*, Tianqin Li*

* Equal Contribution

Preprints 2025

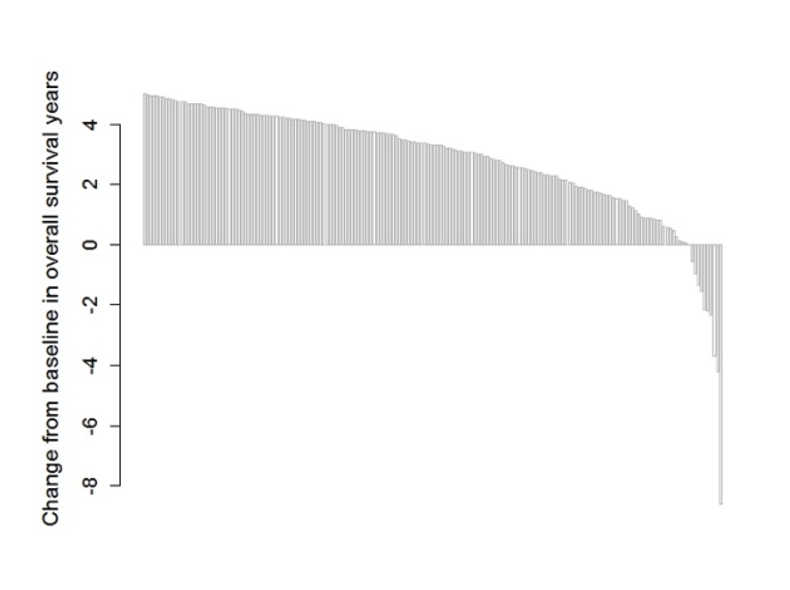

From Local Cues to Global Percepts: Emergent Gestalt Organization in Self-Supervised Vision Models

Tianqin Li, Ziqi Wen, Leiran Song, Jun Liu, Zhi Jing, Tai Sing Lee

Preprints 2025

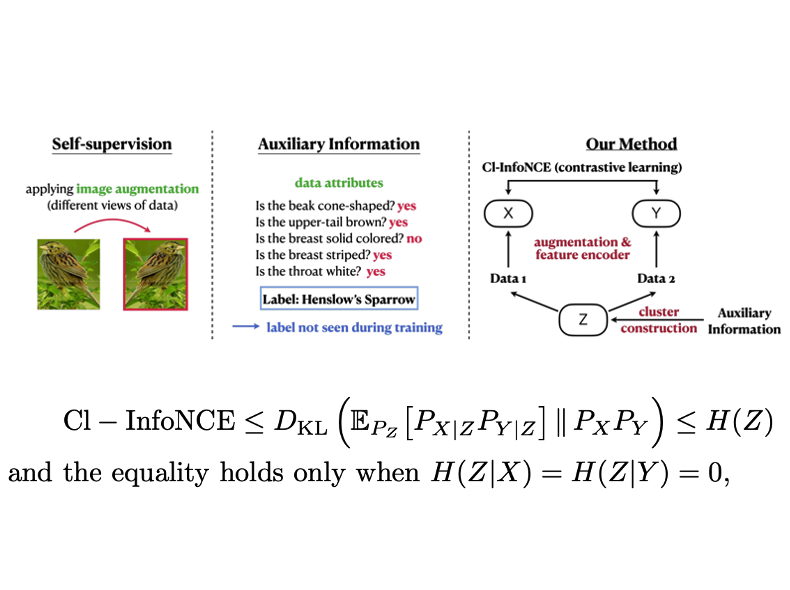

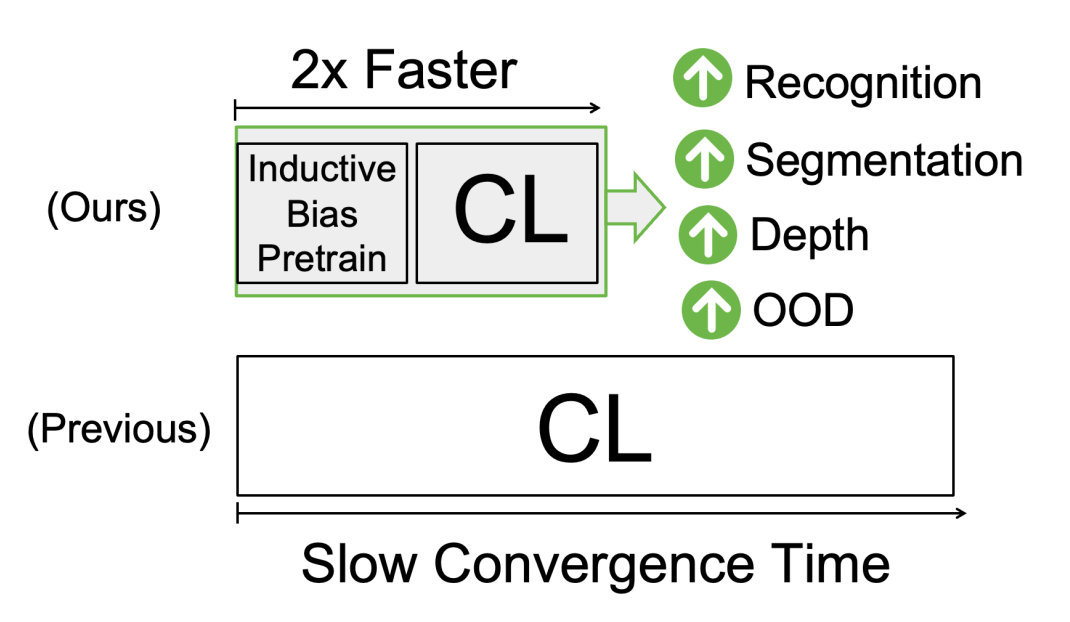

Perceptual Inductive Bias Is What You Need Before Contrastive Learning

Tianqin Li*, Junru Zhao*, Dunhan Jiang, Shenghao Wu, Alan Ramirez, Tai Sing Lee

* Equal Contribution

CVPR 2025

Paper | Project Page | Code

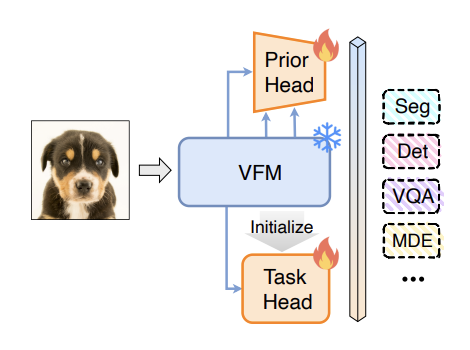

ViT-Split: Unleashing the Power of Vision Foundation Models via Efficient Splitting Heads

Yifan Li, Xin Li, Tianqin Li, Wenbin He, Yu Kong, Liu Ren

ICCV 2025

Paper | Project Page | Code

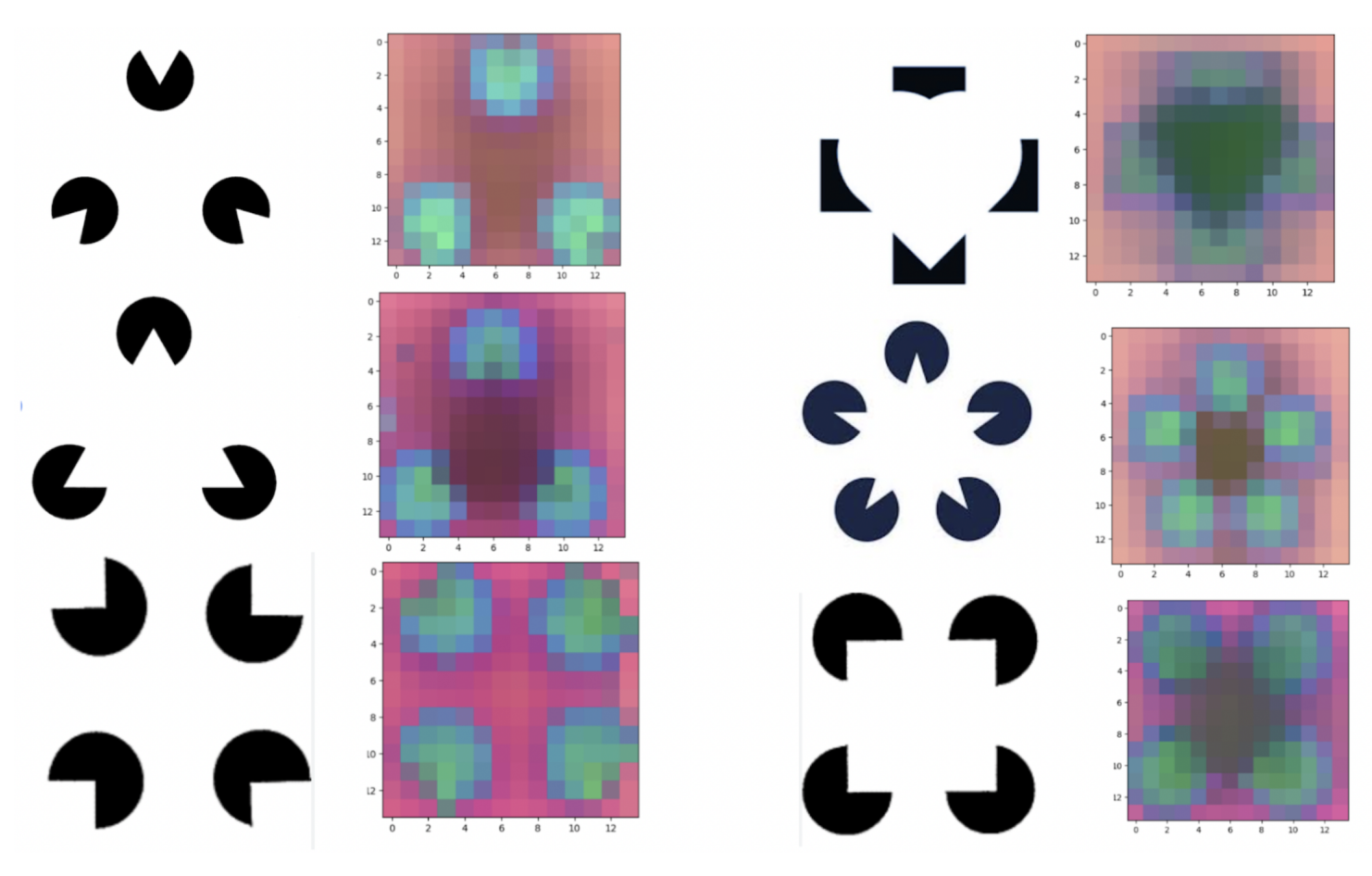

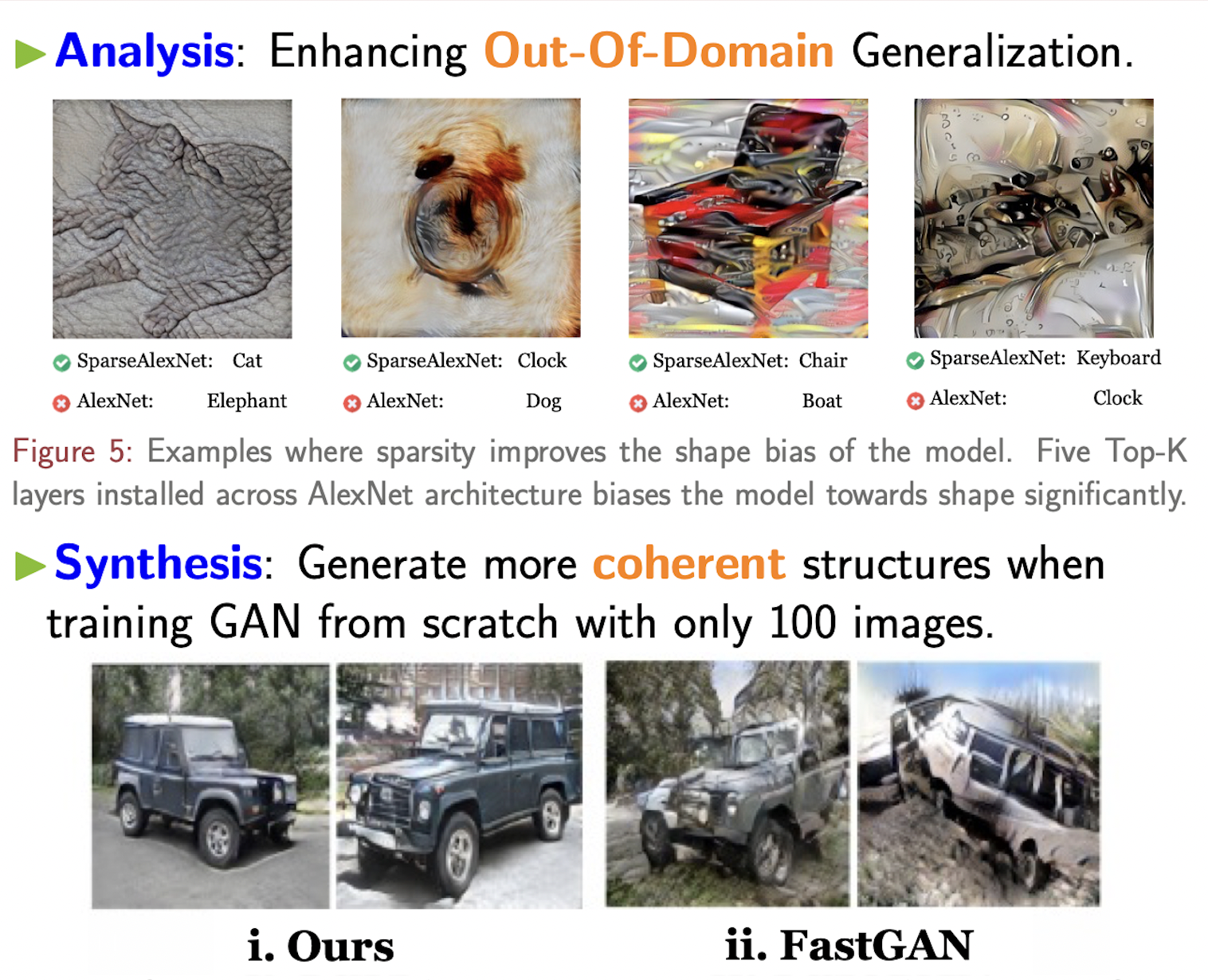

Emergence of Shape Bias in Convolutional Neural Networks through Activation Sparsity

Tianqin Li, Ziqi Wen, Yangfan Li, Tai Sing Lee

NeurIPS [Oral] 2023 (selective 1%)

Paper | Project Page | Code